You can download the Service Pack 4 for SQL Server 2005 from here. This is…

In a recent discussion it was suggested to me that not only is dynamic T-SQL useful for things like catch-all queries or some really hard to solve problems involving variable table lists, but is, in fact, a perfectly acceptable design pattern for all queries against a database. Note, in this case, we’re not talking about an ORM tool which takes control of the system through parameterized queries, but rather an intentional choice to build nothing but dynamic T-SQL directly on the system.

To me, this was immediately problematic. I absolutely agree, you’re going to have dynamic T-SQL for some of those odd-ball catch-all search queries. But to simply expand that out to include all your queries is nuts. There really is a reason that stored procedures exist, and it’s not to build dynamic T-SQL. First things first, we are talking about using sp_executesql so we can avoid problems with SQL Injection, although that should be the very first concern that comes from this methodology. But after that, you need to worry about your management of the system. Here’s an example query:

CREATE PROCEDURE [dbo].[SearchRecords] @searchQuery AS NVARCHAR(100), @col AS VARCHAR(100) AS BEGIN

SET NOCOUNT ON; DECLARE @SQL NVARCHAR(1000); DECLARE @value NVARCHAR(100);

SET @value = @searchQuery;

IF (@col = ‘PERSON_ID’) BEGIN SET @SQL = ‘SELECT * FROM dbo.Movie WHERE MovieId = @value’; END ELSE IF (@col = ‘FIRST_NAME’) BEGIN SET @SQL = ‘SELECT * FROM dbo.Movie WHERE MovieName = @value’;

END

EXEC sp_executesql @SQL,N’@value nvarchar(20)’,@value=@value;

END

GO

No chance of SQL injection with this, but there are other problems. The first one that comes up for me is that I’ve had to use a generic data type for @value, NVARCHAR(100). I’m passing that to both an ID and a NVARCHAR which means, when passing it to the ID I’m going to get a implicit conversion, possibly affecting index use for that part of the query. But it gets worse. Let’s execute the query twice, but I want clear the cache (please only do this on test systems):

DBCC FREEPROCCACHE();

GO

EXEC dbo.SearchRecords @searchQuery = N’42’, — nvarchar(100) @col = ‘PERSON_ID’ — varchar(100);

GO

EXEC dbo.SearchRecords @searchQuery = N’Serpico’, — nvarchar(100) @col = ‘FIRST_NAME’ — varchar(100);

GO

So now we have two different plans in cache. If I query the cache like this:

SELECT deqp.query_plan, deqs.execution_count, deqs.query_hash, deqs.query_plan_hash, dest.text, OBJECT_NAME(dest.objectid)

FROM sys.dm_exec_query_stats AS deqs

CROSS APPLY sys.dm_exec_sql_text(deqs.sql_handle) AS dest

CROSS APPLY sys.dm_exec_query_plan(deqs.plan_handle) AS deqp;

GO

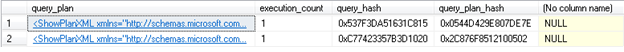

I get a set of results that looks like this:

Note the lack of of an OBJECT_NAME. This is because there is no objectid stored with the plan created by sp_executesql. So, if I had hundreds of procedures that generated hundreds or even thousands of different queries through a dynamic process like this, I have absolutely no way of know which of the hundreds of procedures generated which query without going through and doing text searches against the code. I have to worry about SQL injection and I’m going to be doing all kinds of crazy searches to find the code that I need to tune or modify? No, for me, this is a very poor design pattern and not one I would suggest people adopt.